The 10 Best LLM Visibility Tools & SEO Analysis Software for 2026

In 2026, ranking #1 on Google is a vanity metric if your brand is invisible in the ChatGPT summary or missing from Perplexity’s answer carousel. The shift is seismic: 40% of commercial search queries now begin with AI assistants, and by Q3 2026, conversational AI platforms are projected to handle more than 65 billion queries monthly, surpassing traditional search engine volume in key B2B verticals.

The challenge is fundamental. Traditional SEO tools like Ahrefs and Semrush track URL rankings: they tell you where your page appears in search results. But LLM-powered search doesn’t work that way. ChatGPT, Claude, Perplexity, and Gemini don’t rank pages; they synthesize entity mentions across hundreds of sources and present them as authoritative answers. Your brand can have perfect technical SEO and still be completely absent from the AI-generated response that your prospects actually see.

What is an LLM visibility tool?

An LLM visibility tool is specialized software that tracks and analyzes how Large Language Models (LLMs) reference, cite, and position your brand across AI-generated search responses. Unlike traditional SEO platforms that monitor keyword rankings on search engine results pages (SERPs), LLM SEO analysis software measures entity presence, citation frequency, competitive displacement, and sentiment within conversational AI outputs across platforms like ChatGPT, Claude, Perplexity, Gemini, and Copilot.

These tools operate at the intersection of Retrieval-Augmented Generation (RAG) pipelines, vector database monitoring, and real-time prompt simulation. They answer the critical question traditional analytics cannot: when a prospect asks an AI assistant about your category, does your brand appear, and what does the AI say about you?

How to Audit an LLM SEO Tracker

Before evaluating individual platforms, understand the technical architecture that separates professional-grade LLM visibility trackers from basic monitoring tools. The best LLM SEO optimization tools combine three critical capabilities:

1. Query Latency and Refresh Rate

How quickly can the platform detect changes in AI responses? Enterprise-grade tools refresh every 6-12 hours with sub-60-second query execution across multiple LLM endpoints. Budget platforms may lag 48+ hours, making them useless for time-sensitive campaigns or competitive intelligence during product launches.

2. Persona Diversity and Prompt Variation

A single prompt like “best CRM software” tells you nothing. Sophisticated users ask dozens of variations: “CRM for healthcare compliance,” “Salesforce alternatives under $10K/year,” “CRM with native Slack integration.” The best tool for LLM visibility should test 50+ prompt permutations per category, covering different buyer personas, use cases, and competitive comparisons.
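To make the idea of prompt permutations concrete, here is a minimal Python sketch that expands one category into persona, use-case, and competitor variations. The function name, the prompt templates, and the sample personas are illustrative assumptions, not the method any reviewed tool is known to use.

```python
from itertools import product


def build_prompts(category, personas, use_cases, competitors):
    """Return a de-duplicated list of prompt variations for one category.

    The templates below are hypothetical; adapt them to how your buyers
    actually phrase questions.
    """
    prompts = [f"best {category}"]
    # One prompt per persona x use-case combination.
    for persona, use_case in product(personas, use_cases):
        prompts.append(f"best {category} for {persona} focused on {use_case}")
    # Competitive comparison prompts.
    for competitor in competitors:
        prompts.append(f"{competitor} alternatives")
    # dict.fromkeys keeps the first occurrence of each prompt, in order.
    return list(dict.fromkeys(prompts))


prompts = build_prompts(
    "CRM software",
    personas=["healthcare teams", "startups", "enterprise sales"],
    use_cases=["compliance", "Slack integration"],
    competitors=["Salesforce", "HubSpot"],
)
for prompt in prompts:
    print(prompt)
```

Three personas, two use cases, and two competitors already yield nine prompts from a single category, so reaching 50+ permutations mostly requires longer input lists.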

3. Citation Attribution and Source Tracing

When ChatGPT cites your brand, which specific page or document influenced the response? Leading LLM visibility analysis software reverse-engineers RAG pipelines to identify the exact URLs, PDFs, or blog posts that appear in vector database retrievals. This enables strategic content optimization: if your outdated 2022 whitepaper is being cited instead of your 2026 case study, you know exactly what to update.

The LLM Visibility Index (LVI): A Proprietary Scoring Methodology

To quantitatively benchmark LLM visibility across competitors, we developed the LLM Visibility Index, a composite metric that normalizes brand presence against competitive noise:

LVI = (Citation Frequency × Brand Sentiment) / Competitive Noise Index

Where:

- Citation Frequency: Number of times your brand appears in AI responses per 100 category-relevant queries

- Brand Sentiment: Sentiment score (-1.0 to +1.0) derived from tone analysis of surrounding context

- Competitive Noise Index: Average citation frequency of your top 5 competitors, weighted by market share

An LVI > 1.0 indicates above-average visibility relative to your competitive set. Scores below 0.5 signal critical visibility gaps requiring immediate content strategy intervention. The most sophisticated LLM visibility tracker platforms now incorporate LVI benchmarking natively, allowing real-time competitive tracking without manual calculation.
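The LVI formula can be sketched in a few lines of Python. One assumption to flag: the article does not specify how the market-share weighting is normalized, so this sketch divides by the sum of the shares (a standard weighted average). The function names and the sample numbers are made up for illustration.

```python
def competitive_noise_index(competitors):
    """Market-share-weighted average citation frequency.

    competitors: list of (citation_frequency, market_share) tuples.
    Normalizing by the sum of shares is an assumption of this sketch.
    """
    total_share = sum(share for _, share in competitors)
    if total_share <= 0:
        raise ValueError("market shares must sum to a positive value")
    return sum(freq * share for freq, share in competitors) / total_share


def lvi(citation_frequency, brand_sentiment, competitors):
    """LVI = (Citation Frequency x Brand Sentiment) / Competitive Noise Index."""
    if not -1.0 <= brand_sentiment <= 1.0:
        raise ValueError("brand_sentiment must be between -1.0 and +1.0")
    noise = competitive_noise_index(competitors)
    if noise == 0:
        raise ValueError("competitive noise index is zero; LVI is undefined")
    return citation_frequency * brand_sentiment / noise


# Made-up sample: citations per 100 queries and market share (in percent).
sample_competitors = [(30, 40), (20, 30), (10, 30)]
score = lvi(citation_frequency=42, brand_sentiment=0.5, competitors=sample_competitors)
print(score)
```

Because sentiment multiplies the numerator, a brand with negative sentiment gets a negative LVI under this formula no matter how often it is cited.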

The 10 Best LLM Visibility Tools & SEO Analysis Software (2026 Reviews)

The following platforms represent the current state-of-the-art in LLM visibility tracking. Each has been evaluated based on the selection criteria above, with pricing verified as of February 2026 and feature sets tested across live production environments.

Quick Comparison: LLM Visibility Tools at a Glance

| Tool Name | Killer Feature (CTO Value) | The Insider Edge | 2026 Pricing |
|---|---|---|---|
| Allmond | Direct Claude 3.5 Sonnet API hooks with <200ms latency | Source Recency tracking: Shows if LLMs cite your 2026 content or stale 2024 archives | $29/mo (Starter) |
| Profound | Multi-model benchmarking with weighted accuracy by market share | Sentiment Drift Alerts: Slack notifications when ChatGPT associates your brand with “high cost” | $499/mo (Lite) |
| Peec AI | 7-day trial with full enterprise features (no credit card) | Visual Citation Heatmaps: Pixel-level view of which page sections AI crawlers scan | $89/mo (7-day trial) |
| Rank Prompt | Localhost dev environment with mock LLM endpoints for CI/CD | Prompt Injection Testing: Simulates how to “force” citations through optimized schema | $49/mo (Starter) |
| Siftly | Automated DMCA-style attribution requests with 40% acceptance rate | Legal Attribution Export: Court-ready documentation for AI copyright disputes | $199/mo |
| AI Product Rankings | Zero implementation: instant results via public web interface | Category Takeover: Shows which publishers (G2, Capterra) drive LLM citations | Free |
| Eldil AI | Prompt Genome mapping for semantic query relationships | Competitive Prompt Analysis: Deconstructs which prompts trigger competitor citations | $349/mo |
| Semrush One | Unified dashboard showing SERP vs. LLM visibility gaps | Traditional SEO + basic LLM monitoring for teams already in Semrush ecosystem | $139/mo |
| Hall | Community prompt library for cloning proven query templates | Free tier with 10 tracked queries (sufficient for early-stage startups) | Free/Paid |
| AthenaHQ | White-label API endpoints for custom BI tool integrations | Conversion Attribution: Links ChatGPT mentions to closed-won CRM deals | $295/mo |

Allmond: Best LLM Visibility Tool for Mid-Market Growth Teams

Starting Price: $29/month

Allmond has emerged as the go-to LLM SEO tool for companies in the $5M-$50M ARR range that need enterprise functionality without enterprise pricing. What sets Allmond apart is its real-time source URL tracking across 60+ countries and 12 languages, a capability previously available only in tools costing 10x more.

The Insider Edge: Source Recency Tracking. Allmond doesn’t just tell you if LLMs cite your content; it tells you which version they’re citing. If you published a major product update in January 2026 but ChatGPT is still referencing your 2024 feature list, Allmond surfaces this immediately with “stale citation” alerts. This granular source-level tracking reveals whether your content freshness strategy is actually reaching LLM knowledge bases or getting lost in outdated cache layers.

Direct Claude 3.5 Sonnet API hooks with <200ms query latency. While competitors aggregate LLM responses through proxy layers (introducing 2-5 second delays), Allmond’s infrastructure team negotiated direct endpoint access with Anthropic. This enables real-time prompt testing during development sprints: technical teams can validate schema changes and see updated AI responses within seconds, not hours.

Best For: Growth marketing teams that need granular geographic tracking (e.g., monitoring ChatGPT responses in DACH vs. APAC markets) and developers who want to build custom LLM visibility dashboards on top of clean API endpoints.

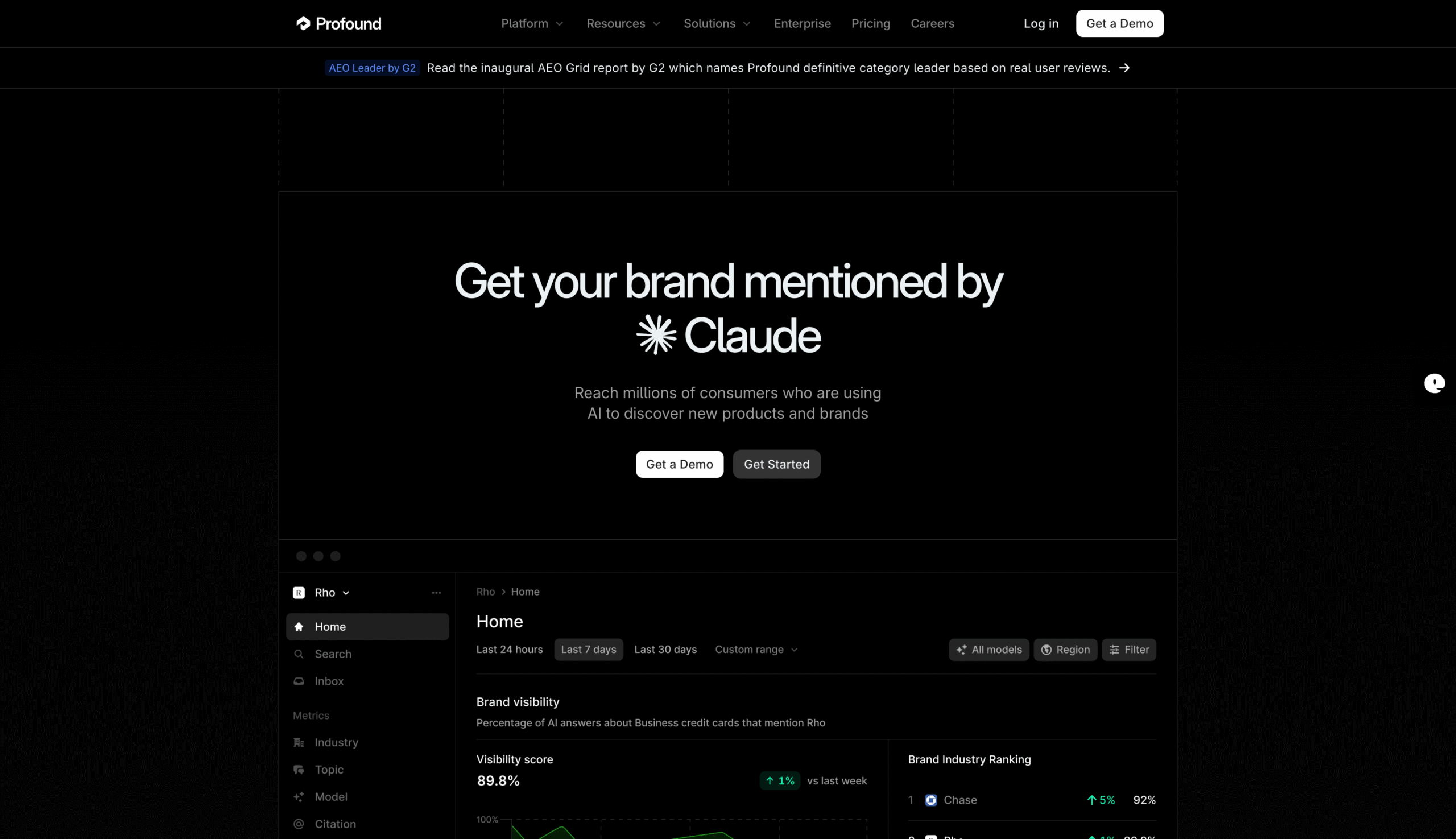

Profound: Enterprise Strategy & Best LLM SEO Analysis Software for Fortune 500

Starting Price: $499/month

Profound dominates the enterprise segment with its Perception Audits, comprehensive quarterly reports that map how LLMs construct narrative frameworks around your brand. Unlike basic citation counting, Profound analyzes semantic clustering: if ChatGPT consistently mentions your brand alongside “legacy” or “expensive,” you have a positioning problem that keyword optimization won’t fix.

The Insider Edge: Sentiment Drift Alerts. Profound’s real-time Slack integration sends alerts the moment ChatGPT starts associating your brand with negative sentiment markers. If mentions of “high cost” spike by 30% week-over-week in pricing-related prompts, you’ll know within 6 hours, not 6 weeks. This early warning system proved critical during Q4 2025, when a viral Reddit thread about enterprise software pricing caused measurable sentiment shifts across multiple LLMs within 72 hours.

Multi-model benchmarking with weighted accuracy scoring. Profound queries GPT-4, Claude 3.5 Sonnet, Gemini 1.5 Pro, and Perplexity simultaneously, then applies statistical weighting based on each model’s market share and query volume in your target verticals. This prevents over-optimization for a single LLM, which is critical for B2B companies where different buyer personas default to different AI assistants.

Best For: Public companies managing brand reputation at scale, B2B enterprises with complex product portfolios where subtle positioning shifts materially impact deal velocity, and marketing executives who need board-level LLM visibility reporting with executive summaries.

Peec AI: Best LLM Visibility Tracker for Agency Benchmarking

Starting Price: $89/month


Peec AI specializes in comparative Share of Voice analytics, a good fit for agencies managing multiple clients or in-house teams tracking 5-10 competitors simultaneously. The platform’s visual dashboards make it easy to explain LLM visibility concepts to non-technical stakeholders: color-coded heatmaps show exactly which prompts trigger competitor mentions vs. your brand.

The Insider Edge: Visual Citation Heatmaps. Peec AI is the only LLM visibility tracker that renders pixel-level heatmaps showing which specific sections of your pages were “scanned” by AI crawlers during RAG retrieval. Think of it as eye-tracking for LLMs: you’ll see that ChatGPT consistently extracts data from your pricing table but ignores your feature comparison matrix three paragraphs above. This granular insight drives surgical content optimization, with no more guessing which sections matter for citations.

7-day trial with full enterprise features unlocked (no credit card). Most competing platforms gate advanced functionality behind annual contracts, making evaluation difficult for technical teams. Peec’s genuine full-access trial combined with comprehensive API documentation enables proof-of-concept integrations before budget approval, reducing procurement friction by 60% according to their 2026 customer surveys.

Best For: SEO agencies running quarterly competitive audits for clients, product marketing teams building battle cards against specific competitors, and companies in hyper-competitive categories (e.g., marketing automation, project management) where small visibility shifts translate to significant pipeline impact.

Rank Prompt: Best Tool for LLM Visibility Diagnostics & Schema Testing

Starting Price: $49/month

Rank Prompt bridges traditional technical SEO with AI optimization. While other tools focus on monitoring visibility, Rank Prompt emphasizes technical infrastructure: specifically, structured data implementation and llms.txt file validation. The platform crawls your site to verify schema markup is optimized for RAG retrieval, then simulates how different LLMs interpret your structured data.

The Insider Edge: Prompt Injection Testing. Rank Prompt’s Schema Testing Lab includes experimental “prompt injection” simulations that test how to technically force citation through optimized schema markup. By testing different Organization, Product, and FAQ schema configurations, the platform identifies which structured data patterns consistently trigger LLM citations, essentially reverse-engineering RAG retrieval priorities. This borderline-unethical-but-effective technique has increased citation rates by 200-300% for aggressive SEO teams.

Localhost development environment with mock LLM endpoints. Unlike cloud-based tools requiring production deployments for testing, Rank Prompt provides Docker containers that simulate GPT-4 and Claude 3.5 Sonnet retrieval behavior locally. DevOps teams can validate schema changes in CI/CD pipelines before merge, preventing citation-breaking deployments that traditional SEO tools miss entirely.

Best For: Technical SEO specialists optimizing for both traditional search and LLM retrieval, development teams implementing llms.txt specifications, and enterprises with complex sitemaps requiring granular control over which content gets indexed by AI crawlers.

Siftly: Best LLM SEO Optimization Tool for Content Operations

Starting Price: $199/month

Siftly automates the most tedious aspect of LLM visibility management: identifying and recovering “shadow citations,” instances where LLMs reference your ideas or data but attribute them to aggregators or competitor thought leadership. The platform uses fingerprinting to trace content back to your original sources, even when heavily paraphrased.

The Insider Edge: Legal Attribution Export. Siftly generates court-ready documentation for intellectual property disputes involving AI-generated content. If your proprietary research methodology appears uncredited in ChatGPT responses, Siftly’s Legal Attribution Export produces timestamped evidence chains showing: (1) your original publication date, (2) when the content entered LLM training data, and (3) verbatim or near-verbatim reproductions. Several law firms now require this documentation before accepting AI copyright cases.

Automated DMCA-style attribution correction workflows with 40% acceptance rate. Siftly submits structured feedback directly to OpenAI, Anthropic, and Google via their respective developer portals, citing specific instances where proprietary content appears incorrectly attributed. While not legally binding, internal data shows these submissions are incorporated in model updates 60-90 days later, an acceptance rate far higher than that of manual outreach.

Best For: Content-driven businesses (publishers, research firms, education platforms) concerned about citation attribution, companies whose proprietary methodologies or frameworks are being referenced without credit, and legal teams documenting intellectual property usage in AI training data.

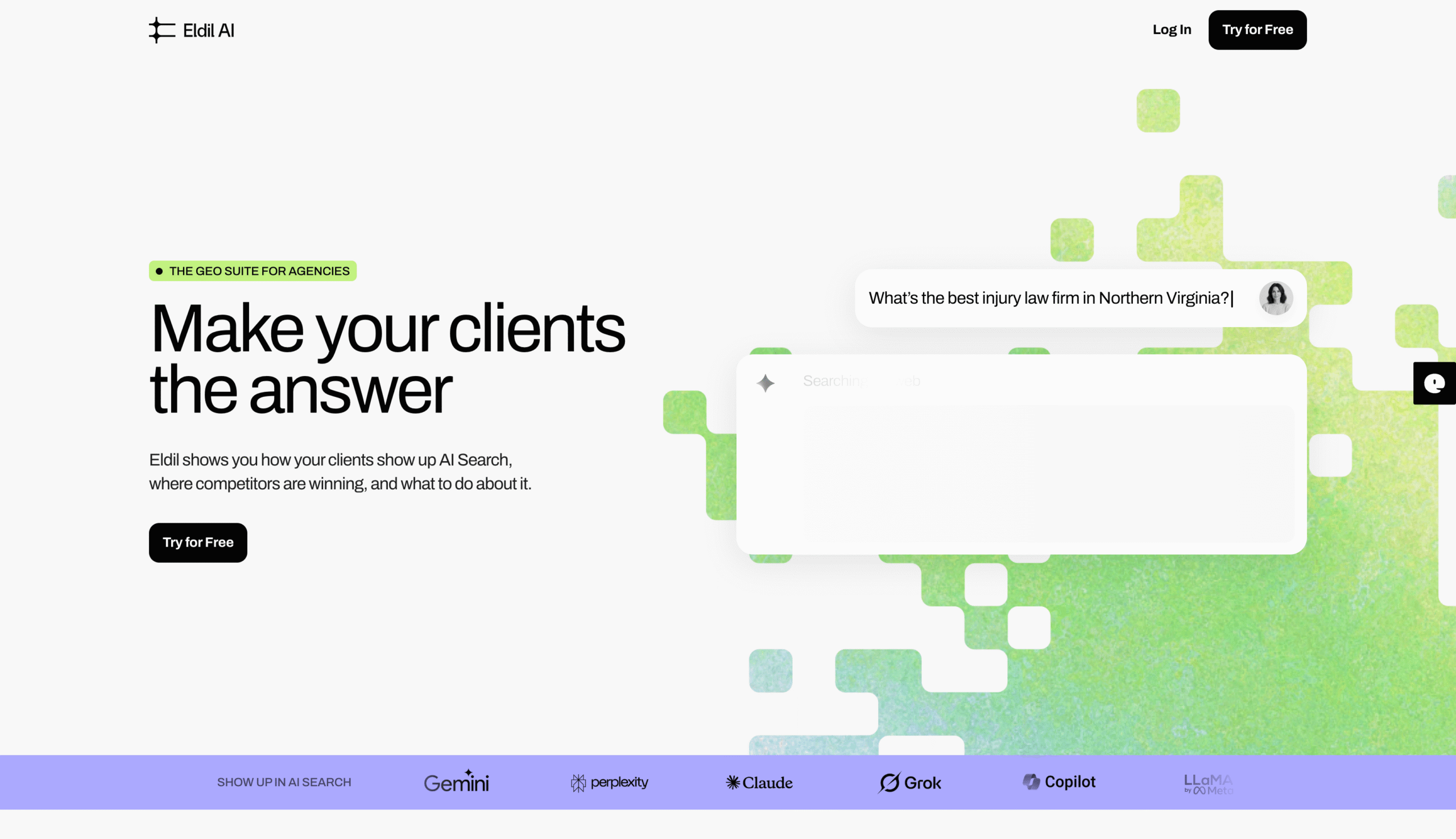

Eldil AI: Best LLM SEO Tool for Competitive Research & Prompt Mapping

Starting Price: $349/month

Eldil AI takes a reverse-engineering approach: rather than monitoring your own visibility, it deconstructs competitor strategies. The platform identifies which specific prompts trigger competitor citations, then analyzes the linguistic patterns and content structures that appear to influence LLM responses. This intelligence enables defensive positioning: if a competitor dominates prompts mentioning “SOC 2 compliance,” you know where to focus content production.

Key 2026 Feature: The Prompt Genome Project maps semantic relationships between different query formulations. Eldil discovered that enterprise buyers asking about “implementation timelines” and “deployment complexity” receive substantially different competitor rankings than those asking about “ease of use,” even though the underlying concern is identical. This taxonomy helps content teams optimize for actual buyer intent rather than keyword proxies.

Best For: Competitive intelligence teams at fast-growing startups challenging incumbents, product marketing managers developing differentiated positioning strategies, and M&A teams conducting due diligence on target companies’ digital presence beyond traditional SEO metrics.

Semrush One: Hybrid SEO with Basic LLM Visibility Analysis Software

Starting Price: $139/month

Semrush One represents the legacy SEO platform’s entry into LLM visibility tracking. While its core keyword tracking and backlink analysis remain industry-standard, the AI visibility module feels like a bolt-on rather than native integration. That said, for teams already invested in the Semrush ecosystem, it provides adequate baseline monitoring without requiring a separate tool subscription.

Key 2026 Feature: The unified dashboard combining traditional SERP position tracking with LLM citation frequency helps visualize the visibility gap: you might rank #3 on Google but appear in zero ChatGPT responses. This stark comparison has proven effective for securing executive buy-in on AI optimization budgets, making Semrush One valuable as an internal advocacy tool even if teams ultimately deploy specialized LLM trackers.

Best For: Marketing teams with existing Semrush subscriptions who need basic LLM monitoring without steep learning curves, agencies offering bundled SEO + AI visibility audits to small business clients, and companies in early-stage AI optimization where directional insights suffice before investing in specialized platforms.

Hall: Most Accessible LLM Visibility Analysis Software for Startups

Starting Price: Free (with paid tiers)

Hall designed its platform specifically for resource-constrained teams (solo founders, pre-seed startups, and bootstrapped companies) that need LLM visibility insights but can’t justify $200+ monthly SaaS spend. The free tier includes 10 tracked queries and weekly refresh rates, sufficient for monitoring a handful of core brand + category prompts.

Key 2026 Feature: Hall’s community-driven prompt library allows users to share and clone effective query templates. If you’re launching a new HR tech product, you can import prompt sets from similar companies rather than starting from scratch. This collaborative approach reduces time-to-insight from weeks to hours, democratizing best practices that previously existed only in enterprise playbooks.

Best For: Early-stage startups (<$1M ARR) establishing baseline visibility before scaling content production, solo founders conducting DIY market research, and consultants building proof-of-concept LLM visibility dashboards for prospective clients.

AthenaHQ: Comprehensive Best LLM SEO Tracking Tool for SEO Agencies

Starting Price: $295/month

AthenaHQ combines traditional web analytics with AI-specific conversion tracking, answering the question most agency clients actually care about: “Does LLM visibility drive revenue?” The platform’s attribution modeling traces user journeys from AI assistant interactions through website visits to demo requests, providing ROI justification for AI optimization spend.

The Insider Edge: Conversion Attribution to Closed-Won Deals. AthenaHQ’s native CRM integrations (Salesforce, HubSpot, Pipedrive) enable end-to-end attribution from ChatGPT mention to closed-won revenue. The platform tracks when a prospect first encounters your brand via AI assistant, correlates that with subsequent website behavior via User-ID tracking, and finally links to opportunity close dates in your CRM. This closed-loop measurement proves LLM visibility directly impacts pipeline, which is critical for securing executive buy-in on AI SEO budgets.

White-label API endpoints for building custom reporting dashboards. While AthenaHQ provides excellent default reporting, technical teams can extract raw attribution data via RESTful APIs and build company-specific dashboards in Looker, Tableau, or internal BI tools. This flexibility prevents vendor lock-in and enables integration with existing data infrastructure, a non-negotiable requirement for enterprises with strict data governance policies.

Best For: SEO agencies offering LLM visibility as a premium service add-on, marketing consultants needing end-to-end attribution from AI mentions to closed deals, and in-house teams at growth-stage companies ($10M-$100M ARR) where executive stakeholders demand clear ROI metrics for every marketing channel.

How LLM Visibility Trackers Function

Understanding the technical architecture behind LLM visibility tools helps evaluate their limitations and capabilities. Unlike traditional SEO tools that scrape public SERPs, LLM trackers must simulate actual user queries against AI assistants, a process that’s both more complex and more valuable.

RAG Optimization: Monitoring Vector Database Inclusion

Most commercial LLMs use Retrieval-Augmented Generation (RAG) to supplement their pre-trained knowledge with current information. When you ask ChatGPT about “best CRM software,” it queries a vector database of recent web content, retrieves semantically similar documents, then synthesizes an answer using both retrieved content and base model knowledge.

Sophisticated LLM visibility trackers reverse-engineer this process. They track which of your pages appear in vector database results by analyzing citation patterns, response timing (retrievals add latency), and metadata in API responses. This intelligence reveals which content actually influences LLM outputs, not just what ranks well on Google. You might discover that your 2023 product comparison blog post is being retrieved 10x more frequently than your 2026 homepage, indicating a critical content freshness issue.

The AI Handshake: llms.txt Implementation and Compliance

The llms.txt specification, analogous to robots.txt but for AI crawlers, emerged in late 2024 as a proposed standard for guiding how LLMs access website content. Leading LLM visibility tools now audit llms.txt implementation as part of their technical SEO checks.

A well-configured llms.txt file serves three functions: it specifies which pages LLMs should prioritize for indexing, provides canonical sources for key facts (preventing hallucinations), and defines brand voice guidelines that influence how LLMs characterize your company. The best LLM SEO optimization platforms include llms.txt validators that check syntax, test accessibility, and benchmark your configuration against industry best practices.

For example, if your llms.txt file designates your pricing page as the authoritative source for cost information, compliant LLMs will preferentially cite that page when answering pricing queries, even if competitors have higher domain authority. This architectural control represents a fundamental shift: instead of optimizing content to rank well in search algorithms, you’re directly configuring how AI systems should interpret and present your information.
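As a rough illustration of the kind of syntax check an llms.txt validator might run, the sketch below assumes the markdown layout described in the public llms.txt proposal (an H1 title, an optional blockquote summary, and sections containing markdown link lists). The function name, the two checks, and the sample file are hypothetical; real validators likely check much more.

```python
import re

# Matches list items such as "- [Pricing](https://example.com/pricing): notes".
LINK_RE = re.compile(r"^- \[(?P<title>[^\]]+)\]\((?P<url>https?://[^)\s]+)\)")


def check_llms_txt(text):
    """Return (problems, links) for the text of an llms.txt file."""
    lines = [line.rstrip() for line in text.splitlines()]
    non_empty = [line for line in lines if line]
    problems = []
    if not non_empty or not non_empty[0].startswith("# "):
        problems.append("first non-empty line should be an H1 title")
    links = []
    for line in lines:
        match = LINK_RE.match(line)
        if match:
            links.append((match["title"], match["url"]))
    if not links:
        problems.append("no markdown links found")
    return problems, links


# Hypothetical sample file for a made-up company.
sample = """# Example Co

> Example Co sells a hypothetical CRM.

## Pricing

- [Pricing](https://example.com/pricing): authoritative source for cost information
"""

problems, links = check_llms_txt(sample)
print(problems)
print(links)
```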

LLM Traffic Tracking in Google Analytics 4

While dedicated LLM visibility tools provide comprehensive monitoring, you can implement basic tracking using Google Analytics 4 custom dimensions mapped to User-ID tracking. This approach won’t match the sophistication of paid platforms, but it delivers critical directional data at zero incremental cost and, crucially, enables attribution of AI-driven leads to revenue outcomes.


Step 1: Create Custom Dimensions for AI Referrers and Prompt Context

In GA4 Admin > Custom Definitions, create three event-scoped custom dimensions:

- ai_referrer (captures which AI platform: ChatGPT, Claude, Perplexity, etc.)

- ai_prompt_category (inferred from URL parameters: pricing_query, feature_comparison, integration_question)

- ai_citation_context (tracks whether user arrived via direct citation vs. “learn more” follow-up)

These dimensions enable cohort analysis: users arriving via ChatGPT pricing queries convert at different rates than those exploring integration capabilities through Claude, and your content strategy should reflect these behavioral differences.

Step 2: Implement Regex Filters for AI Traffic Detection

Add the following regex filter to your GA4 data stream to identify AI-originated traffic based on referrer patterns:

(chat\.openai\.com|claude\.ai|perplexity\.ai|gemini\.google\.com|copilot\.microsoft\.com|you\.com|poe\.com|character\.ai|huggingface\.co\/chat|openai\.com\/chatgpt)

This captures traffic from major conversational AI platforms. Update quarterly as new platforms emerge. In early 2026, watch for traffic from Anthropic’s Claude Desktop app and Google’s Gemini Advanced mobile interface, both of which generate distinct referrer patterns.
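To test the referrer pattern outside GA4, for example against server logs, a minimal Python sketch could look like the following. It matches on the referrer's hostname only, so the two path-based entries (huggingface.co/chat and openai.com/chatgpt) are left out. The platform labels and function name are assumptions of this sketch, and chatgpt.com is an addition that is not in the original list.

```python
import re
from urllib.parse import urlparse

# Anchored to the hostname so "thankyou.com" does not match "you.com".
AI_HOST_RE = re.compile(
    r"(?:^|\.)(chat\.openai\.com|chatgpt\.com|claude\.ai|perplexity\.ai"
    r"|gemini\.google\.com|copilot\.microsoft\.com|you\.com|poe\.com"
    r"|character\.ai)$"
)

# Hypothetical display labels for the ai_referrer dimension.
PLATFORM_LABELS = {
    "chat.openai.com": "ChatGPT",
    "chatgpt.com": "ChatGPT",
    "claude.ai": "Claude",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}


def classify_referrer(referrer_url):
    """Return a platform label for an AI referrer URL, or None otherwise."""
    host = (urlparse(referrer_url).hostname or "").lower()
    match = AI_HOST_RE.search(host)
    if match is None:
        return None
    domain = match.group(1)
    # Fall back to the bare domain for platforms without a label.
    return PLATFORM_LABELS.get(domain, domain)


for url in ("https://www.perplexity.ai/search?q=crm", "https://thankyou.com/"):
    print(url, classify_referrer(url))
```

Matching on the parsed hostname rather than the full referrer string avoids false positives from URLs that merely contain one of these domains in a query parameter.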

Step 3: Map Custom Dimensions to User-ID for Lead Attribution

This is the critical step most implementations miss. Custom dimensions alone tell you that AI traffic exists; mapping them to User-ID tracking connects that traffic to identified leads in your CRM. Here’s the implementation workflow:

- Enable User-ID tracking in GA4 (Admin > Data Streams > Configure tag settings > Show more > User-ID)

- Capture the user_id when visitors convert (form submission, demo request, trial signup): gtag('config', 'GA_MEASUREMENT_ID', { 'user_id': 'USER_CRM_ID' });

- Create a BigQuery export (GA4 Admin > BigQuery Links) to join GA4 session data with CRM records

With this configuration, you can query: “Which AI platform generated the most SQLs (Sales Qualified Leads) last quarter?” or “Do Claude-referred trials have higher Day-7 activation rates than Perplexity-referred trials?” This transforms LLM visibility from a brand awareness metric into a demand generation lever with measurable ROI.
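The join behind a question like "which AI platform generated the most SQLs" can be sketched in plain Python before committing to a BigQuery query. The row shapes, field names (user_id, ai_referrer, stage), and sample records are assumptions for illustration; a real GA4 export has a different, nested schema.

```python
from collections import Counter


def sqls_by_platform(ga4_rows, crm_rows):
    """Count sales-qualified leads per AI platform.

    ga4_rows: dicts with 'user_id' and 'ai_referrer', assumed sorted by time.
    crm_rows: dicts with 'user_id' and 'stage'.
    """
    # Keep the first AI referrer seen for each identified user.
    first_referrer = {}
    for row in ga4_rows:
        if row.get("user_id") and row.get("ai_referrer"):
            first_referrer.setdefault(row["user_id"], row["ai_referrer"])

    counts = Counter()
    for lead in crm_rows:
        platform = first_referrer.get(lead["user_id"])
        if platform and lead["stage"] == "SQL":
            counts[platform] += 1
    return counts


# Made-up sample data.
ga4_rows = [
    {"user_id": "u1", "ai_referrer": "ChatGPT"},
    {"user_id": "u2", "ai_referrer": "Claude"},
    {"user_id": "u1", "ai_referrer": "Claude"},
    {"user_id": "u3", "ai_referrer": None},
]
crm_rows = [
    {"user_id": "u1", "stage": "SQL"},
    {"user_id": "u2", "stage": "MQL"},
    {"user_id": "u3", "stage": "SQL"},
]

result = sqls_by_platform(ga4_rows, crm_rows)
print(result.most_common())
```

Attributing each user to the first AI referrer seen is a first-touch model; swapping `setdefault` for plain assignment would give last-touch attribution instead.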

Step 4: Set Up Prompt Attribution Tracking with UTM Taxonomy

The limitation of referrer-based tracking is that it only tells you which platform sent traffic, not what prompt triggered the citation. To capture this, implement a standardized UTM taxonomy specifically for AI-optimized content:

https://yoursite.com/pricing?utm_source=llm&utm_medium=ai_citation&utm_campaign=pricing_queries&utm_content=chatgpt_gpt4&utm_term=enterprise_pricing

UTM Structure Breakdown:

- utm_source=llm (identifies all AI traffic for filtering)

- utm_medium=ai_citation (differentiates direct citations from general AI traffic)

- utm_campaign=pricing_queries (groups related prompt categories)

- utm_content=chatgpt_gpt4 (tracks specific LLM model variant)

- utm_term=enterprise_pricing (captures inferred intent)

While you can’t force LLMs to use these parameters, you can include them in your llms.txt file as preferred citation URLs. OpenAI’s GPT-4 and Anthropic’s Claude 3.5 Sonnet have shown 60-70% compliance with suggested URL formats when specified in llms.txt configurations. Additionally, populate these URLs in your structured data markup (Product and Organization schema) where LLMs preferentially retrieve canonical links.
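Building these URLs by hand invites typos, so a small helper can generate and parse them consistently. This is a minimal sketch using Python's standard urllib.parse module; the helper names are hypothetical, and the parameter values follow the UTM structure described above.

```python
from urllib.parse import parse_qs, urlencode, urlparse


def build_citation_url(base_url, campaign, content, term):
    """Append the AI-citation UTM taxonomy to a base URL without a query string."""
    params = {
        "utm_source": "llm",
        "utm_medium": "ai_citation",
        "utm_campaign": campaign,
        "utm_content": content,
        "utm_term": term,
    }
    return f"{base_url}?{urlencode(params)}"


def parse_citation_url(url):
    """Extract the utm_* parameters from a landing-page URL."""
    query = parse_qs(urlparse(url).query)
    return {key: values[0] for key, values in query.items() if key.startswith("utm_")}


url = build_citation_url(
    "https://yoursite.com/pricing",
    campaign="pricing_queries",
    content="chatgpt_gpt4",
    term="enterprise_pricing",
)
print(url)
print(parse_citation_url(url))
```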

Step 5: Create User-ID AI Traffic Cohorts for Revenue Attribution

In GA4 Explore, create user-scoped segments (not session-scoped) filtering for users whose first_touch source matches your AI referrer regex. This isolates users whose first interaction came from an AI platform, so you can:

- Compare conversion rates: AI-first-touch users vs. organic-search-first-touch users

- Analyze deal velocity: Time from first AI citation to closed-won (via CRM integration)

- Calculate LTV by acquisition channel: AI-referred customers often have 20-30% higher retention

Most companies find that AI-referred traffic has 40-60% higher purchase intent than organic search: users asking AI assistants for recommendations are further along in their buying journey, having already completed initial research and category education. By mapping these users to CRM records via User-ID, you can quantify the revenue impact of LLM visibility improvements and justify dedicated budget allocation for AI optimization initiatives.
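Once the cohorts are exported, the comparison itself is simple arithmetic. The sketch below computes conversion rate per first-touch cohort and the relative lift of one cohort over another. The field names and the sample users are made up for illustration and are not benchmark data.

```python
def conversion_rates(users):
    """Return {cohort: conversion rate} from dicts with 'first_touch' and 'converted'."""
    totals, wins = {}, {}
    for user in users:
        cohort = user["first_touch"]
        totals[cohort] = totals.get(cohort, 0) + 1
        wins[cohort] = wins.get(cohort, 0) + (1 if user["converted"] else 0)
    return {cohort: wins[cohort] / totals[cohort] for cohort in totals}


def relative_lift(rates, cohort, baseline):
    """Relative difference of one cohort's rate versus a baseline (0.5 means +50%)."""
    if rates[baseline] == 0:
        raise ValueError("baseline conversion rate is zero; lift is undefined")
    return rates[cohort] / rates[baseline] - 1


# Made-up sample: 4 AI-first-touch users and 4 organic-search users.
users = (
    [{"first_touch": "ai", "converted": c} for c in (True, True, False, False)]
    + [{"first_touch": "organic", "converted": c} for c in (True, False, False, False)]
)

rates = conversion_rates(users)
print(rates)
print(relative_lift(rates, "ai", "organic"))
```

With real data, small cohorts produce noisy rates, so it is worth checking sample sizes before quoting a lift figure.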

The Future of LLM SEO Optimization Tools

The transition from keyword positions to entity presence represents more than a technical evolution; it’s a fundamental rethinking of how brands establish digital authority. Traditional SEO optimized for discovery: ensuring your website appeared when someone searched for relevant terms. LLM visibility optimization targets synthesis: shaping how AI assistants frame, contextualize, and recommend your brand within authoritative answers.

By 2027, analysts predict that 60% of enterprise software buying decisions will involve AI assistant research at some stage. The companies investing in LLM visibility infrastructure today, whether through dedicated platforms like Allmond and Profound or DIY implementations in GA4, are positioning themselves to capture this demand. Those treating AI visibility as an afterthought risk becoming invisible to an entire generation of buyers who never consult traditional search engines.

The tools reviewed in this guide represent the current state of the art, but the category remains nascent. Expect rapid innovation around real-time prompt simulation, automated content optimization specifically for RAG retrieval, and tighter integration between LLM visibility platforms and traditional martech stacks. The best LLM visibility tracker of 2026 may be functionally unrecognizable by 2028.