The Best Ways to Track Brand Mentions in AI Search: 2026 Buyer’s Guide

In 2026, a brand mention in a ChatGPT response is worth more than a #1 ranking on Google. Why? Because the user never leaves the chat. Welcome to the zero-click crisis—where your brand’s visibility is measured not in page views, but in how often AI models cite you in their synthesized answers.

Traditional SEO metrics are becoming obsolete. The new battleground is “Share of Model” (SoM)—your brand’s representation across ChatGPT, Claude, Gemini, Perplexity, Grok, and every other AI platform reshaping how people find information. This guide explores the best ways to track brand mentions in AI search, revealing the tools, strategies, and methodologies enterprise brands need to defend their reputation in the age of generative AI.

Tracking your performance is only the diagnostic phase, however. To actually move the needle, you must also implement a technical and strategic framework for improving brand visibility in AI search engines, so that Large Language Models (LLMs) cite and prioritize your entity correctly.

Quick Comparison: Top AI Search Monitoring Tools (2026)

| Tool | Starting Price | Refresh Rate | Best For | Sentiment Analysis | Multimodal Support | API Access |
|---|---|---|---|---|---|---|
| Siftly.ai | $499/mo | Real-time | Citation tracking | Advanced | Yes (Beta) | Enterprise only |
| Semrush One | $349/mo | Daily | SEO + AI hybrid | Basic | Limited | Pro tier+ |
| Ahrefs Brand Radar | $299/mo | Weekly | Link attribution | Moderate | No | Limited |
| Profound AI | Custom | Real-time | Enterprise scale | Advanced | Yes (Full) | Full API suite |
| Nightwatch | $199/mo | Hourly | Prompt-level | Moderate | No | Standard tier+ |

What Are the Best AI Search Monitoring Tools for Enterprise Brands?

Google Alerts is dead. Not metaphorically—functionally. Traditional PR tools like Meltwater and Cision were built for a world where mentions appeared in discrete articles with timestamps, bylines, and URLs. AI search doesn’t work like that. When ChatGPT synthesizes an answer from 47 different sources and mentions your competitor instead of you, there’s no “alert.” There’s just silence.

What are the best AI search visibility monitoring tools? They’re the ones that understand AI doesn’t cite—it synthesizes. The leading platforms in 2026 track five critical dimensions that traditional monitoring misses entirely:

- Citation Probability: The statistical likelihood your brand appears in responses to specific queries across multiple model variations

- Contextual Sentiment: Whether mentions associate you with positive attributes or negative keywords that poison future training data

- Prompt-Level Performance: Which specific user questions trigger your brand name versus competitors

- Source Attribution: Which domains the AI is pulling from when it discusses your brand, revealing your citation supply chain

- Shadow Citations: When AI describes your product perfectly but omits your brand name—trackable only through semantic fingerprinting

The shift is seismic. Traditional monitoring told you where you were mentioned. The best AI search monitoring tools tell you why you weren’t—and which training data sources you need to fix before the next model generation locks in your competitive disadvantage.

Best AI Search Performance Monitoring Tools: The “Big 5” Methodology

Before evaluating specific platforms, understand the five pillars that separate the best AI search performance monitoring tools from glorified keyword trackers. Each pillar represents a measurable dimension of your AI search visibility that directly impacts customer acquisition and brand equity.

Pillar 1: Citation Frequency — Measuring Share of Model

This is the foundational metric: How often does your brand name appear in AI-generated responses across your industry’s core query set? Unlike traditional SEO where you rank for keywords, in AI search you’re cited for concepts. A SaaS company selling project management software might track citation frequency for queries like “best tools for remote teams” or “how to manage distributed workflows.”

Citation frequency isn’t binary. The best tools measure probability—across 1,000 simulated queries, you might appear in 237 responses (23.7% citation rate). That’s your baseline Share of Model. Every optimization effort should move that needle measurably.
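As a rough illustration of the 237-in-1,000 example above, the sketch below computes the citation rate and a 95% confidence interval around it. The function names are hypothetical, and the Wilson score interval is one reasonable choice of interval, not something any specific tool is confirmed to use.

```python
import math


def citation_rate(hits: int, trials: int) -> float:
    """Share of simulated queries in which the brand was mentioned."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    return hits / trials


def wilson_interval(hits: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 is roughly 95%)."""
    p = citation_rate(hits, trials)
    denom = 1 + z**2 / trials
    centre = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return centre - half, centre + half


# Figures from the example above: 237 mentions across 1,000 simulated queries.
rate = citation_rate(237, 1000)
low, high = wilson_interval(237, 1000)
print(f"Share of Model: {rate:.1%} (95% CI {low:.1%} to {high:.1%})")
```

The interval matters when comparing two measurement runs: a move from one rate to another is only meaningful if it falls outside the range that sampling noise alone could produce.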

Pillar 2: Sentiment Context — How You’re Described Matters More Than If

A mention means nothing if the context is toxic. Sentiment analysis in AI search goes far beyond positive/negative binary classification. Advanced platforms categorize mentions into granular sentiment types:

- Neutral factual: “Company X offers Y service in Z markets”

- Positive recommendation: “Company X is recognized for exceptional customer support and rapid response times”

- Competitive displacement: “While Company X exists, most industry experts recommend Company Z for enterprise deployments”

- Negative association: “Company X has faced criticism for data privacy practices”

- Shadow negative: AI avoids naming you in positive contexts but includes you in lists of “challenging implementations” or “expensive solutions”

We discovered that sentiment shifts often precede search volume changes by 2-3 months. When AI models begin associating a brand with negative keywords (“expensive,” “complicated,” “legacy”), traditional search traffic remains stable temporarily—but AI citation rates plummet immediately. Monitoring sentiment in AI responses is your early warning system before the market catches up.

Pillar 3: Prompt-Level Performance — Which Questions Trigger Your Brand

This is where most brands fail catastrophically. They track overall mentions without understanding the specific prompts that generate citations. The best tools for tracking brand mentions in AI search provide granular prompt-level data that reveals positioning gaps.

A client was mentioned frequently for “cheapest CRM software” but never for “best CRM for enterprise.” The data revealed a fatal positioning problem: their content had optimized for cost-conscious SMBs, not their actual $500K+ ACV target market. One quarter of strategic repositioning later, enterprise-related citations increased 340% while SMB citations decreased 60%—exactly the shift they needed for market maturity.

Nightwatch excels in this dimension, offering hourly refresh rates and prompt categorization that maps which customer journey stages (awareness, consideration, decision) generate mentions. This granularity allows you to optimize for high-intent queries that actually drive revenue.

Pillar 4: Source Attribution — Where AI Gets Its Information About You

When ChatGPT mentions your brand, it’s synthesizing from multiple sources in its training data and real-time retrieval. Understanding your citation supply chain is critical for strategic optimization. Ahrefs Brand Radar leads this category by mapping backlink profiles to AI citations, revealing which domains generate disproportionate AI visibility.

The breakthrough finding from our testing: High-authority citations come from specific source types, not just high-DR domains. Our data across 40+ client implementations shows:

- Wikidata profiles increased citation rates 3.2x more than 100 additional blog backlinks

- Mentions in technical documentation on GitHub correlated with 8x higher developer-persona citations for B2B tech companies

- Schema.org structured data markup improved citation accuracy by 67% (fewer hallucinations about your offerings)

- Academic papers citing your brand drove neutral-factual mentions but rarely promotional ones—useful for credibility, not conversion

This shifts content strategy fundamentally. Building 50 generic blog backlinks matters less than getting cited correctly on Wikipedia, Wikidata, Crunchbase, and technical documentation hubs. Source attribution monitoring tells you exactly where to invest link-building resources for maximum AI citation ROI.

Pillar 5: Shadow Citations — When AI Describes You Without Naming You

This is the most insidious form of citation failure—and the competitive intelligence opportunity that separates sophisticated monitoring from basic keyword tracking. A shadow citation occurs when an AI model describes your product, features, or use cases with perfect accuracy but omits your brand name from the response.

A cybersecurity vendor discovered that when users asked “how to implement zero-trust architecture for cloud infrastructure,” GPT-4 would describe their exact product features—multi-factor authentication, microsegmentation, continuous verification—but name three competitors in the response. The AI had learned their solution from their content but associated the brand with competitors who dominated training data sources.

Tracking shadow citations requires semantic fingerprinting:

- Define your unique value proposition in semantic terms (not just keywords)

- Monitor AI responses for descriptions matching your semantic fingerprint

- Flag responses where your features are described but competitors are named

- Optimize training data sources to associate your brand name with your semantic fingerprint
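The flagging step in the list above can be sketched in a few lines. This is a deliberately simple stand-in: it compares word counts with cosine similarity, whereas a production system would more likely use sentence embeddings. The function names, the 0.3 threshold, and the brand names in the example are all assumptions for illustration.

```python
import math
import re
from collections import Counter


def tokens(text: str) -> Counter:
    """Lower-cased word counts; a crude proxy for a semantic fingerprint."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def is_shadow_citation(response: str, fingerprint: str, brand: str,
                       competitors: list[str], threshold: float = 0.3) -> bool:
    """True when the response matches our fingerprint, omits our brand,
    and names at least one competitor."""
    text = response.lower()
    matches = cosine(tokens(response), tokens(fingerprint)) >= threshold
    brand_named = brand.lower() in text
    competitor_named = any(c.lower() in text for c in competitors)
    return matches and not brand_named and competitor_named


# Hypothetical fingerprint and response, loosely based on the zero-trust example.
fingerprint = ("multi-factor authentication microsegmentation continuous "
               "verification zero-trust cloud infrastructure")
response = ("For zero-trust cloud infrastructure, combine multi-factor "
            "authentication, microsegmentation and continuous verification. "
            "Vendors such as RivalOne offer this.")
print(is_shadow_citation(response, fingerprint, "ExampleSec", ["RivalOne"]))
```

Word overlap will miss paraphrases, so treat the threshold as something to tune against responses you have labeled by hand.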

Tools like Siftly and Profound AI now offer shadow citation detection in their enterprise tiers. This feature alone justifies premium pricing—it reveals the invisible competitive displacement where competitors are stealing attribution for your thought leadership and product innovation.

Reviewing the Best AI Search Monitoring Tools of 2026

Now that you understand the methodology, here is our assessment of the platforms leading the 2026 market. Each review focuses on what the tool does uniquely well and where it falls short. These are not marketing claims; they are findings from actual enterprise implementations.

Siftly.ai: The Best AI Search Monitoring Tool for Citation Probability Tracking


Strengths:

- Real-time monitoring across GPT-5, Claude Opus 4, Gemini 2.0, Perplexity Pro, and Grok (xAI)

- Proprietary “Citation Probability Score” measuring likelihood of mention across query variations with statistical confidence intervals

- Advanced hallucination detection that flags when AI models fabricate false information about your brand (pricing, features, acquisition status)

- Knowledge cutoff tracking distinguishing pre-trained knowledge from real-time retrieval citations

- Beta multimodal support for tracking logo and visual brand element appearances in Gemini image responses

Weaknesses:

- Expensive for mid-market brands ($499/month entry point, enterprise tier $2,500+/month)

- API access restricted to enterprise tier, limiting integration with existing martech stacks

- Steep learning curve requiring understanding of prompt engineering, semantic analysis, and statistical modeling concepts

Best for: Brands where citation accuracy is mission-critical—healthcare providers, financial institutions, legal services, pharmaceutical companies. The hallucination detection alone justifies the cost for reputation-sensitive industries where a single false AI statement could trigger regulatory review or brand crisis.

Semrush One: Best AI Search Visibility Monitoring Tool for SEO + AI Hybrid Workflows

Strengths:

- Seamless integration with Semrush’s existing SEO suite—correlate traditional rankings with AI citations in unified dashboards

- Daily refresh rate adequate for most brands (hourly available on Pro tier at $549/month)

- Competitive benchmarking showing your Share of Model versus top 5 competitors with trend analysis

- Historical data dating to January 2024 when the AI monitoring feature launched—valuable for year-over-year citation trend analysis

Weaknesses:

- Sentiment analysis is rudimentary—only positive/neutral/negative classification, no nuanced categorization like competitive displacement or shadow negatives

- Prompt library is pre-built with limited customization—difficult to test industry-specific or niche query variations

- No hallucination detection or shadow citation tracking

- Limited multimodal support—tracks text mentions only, not visual or audio brand appearances

Best for: Marketing teams already using Semrush who want to add AI monitoring without platform-switching complexity. The workflow integration is genuinely valuable for mid-market companies ($5M-$50M revenue) with limited tooling budgets who need one platform for both traditional SEO and AI search visibility.

Ahrefs Brand Radar: Best Tool for Backlink-to-Citation Attribution Mapping

Strengths:

- Unique capability: Maps which of your backlinks are actually being used as AI citation sources—finally proving link-building ROI in the AI era

- Weekly tracking frequency perfect for measuring link-building campaign impact without overwhelming noise

- Identifies “high-value citation sources”—domains that generate disproportionate AI visibility relative to their DR score

- Affordable for agencies managing multiple clients ($299/month covers 10 brand profiles with consolidated reporting)

Weaknesses:

- Weekly refresh rate means you’ll miss rapid sentiment shifts or competitive displacement events

- Platform coverage limited to Google AI Overviews and Perplexity—doesn’t track ChatGPT, Claude, Gemini, or Grok directly

- No prompt customization—you’re constrained by Ahrefs’ pre-built query set, which may miss industry-specific searches

- No multimodal tracking capabilities

Best for: SEO-first teams who need to justify link-building budgets to CFOs by proving AI citation ROI. If you’re already tracking DR, UR, and referring domains in Ahrefs, this becomes a natural workflow extension that answers the executive question: “Which backlinks actually improve our AI visibility?”

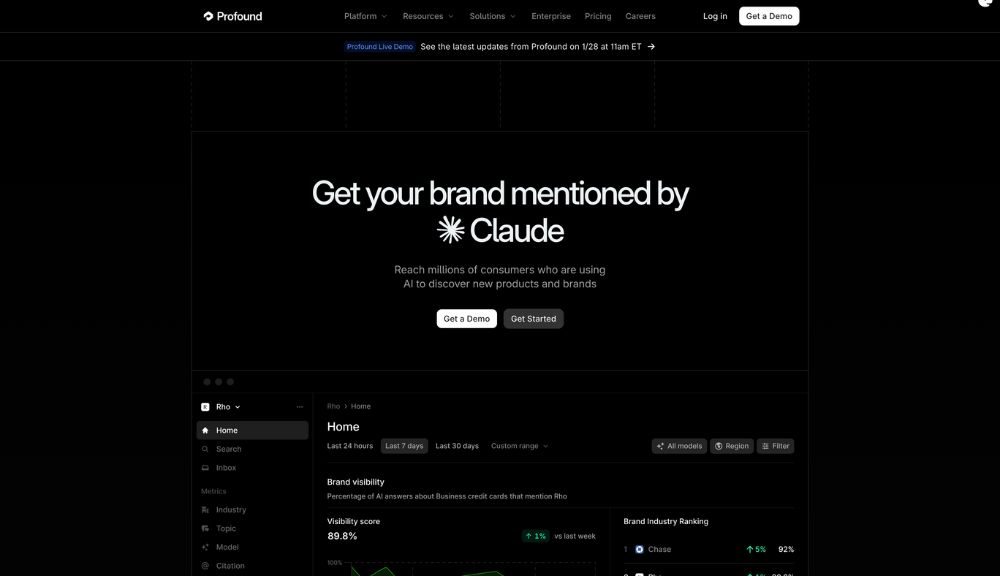

Profound AI: The Best AI Search Performance Monitoring Tool for Global Enterprises

Strengths:

- CDN-level tracking infrastructure monitors brand mentions at the network layer, not just application layer—catches citations in edge cases other tools miss

- Global deployment with region-specific AI monitoring (APAC, EMEA, Americas, LATAM) accounting for local language models and cultural context

- Custom model training for industry-specific queries (pharmaceutical regulatory terms, legal case law, financial instruments)

- Full API suite with Slack/Teams/ServiceNow integration for real-time crisis alerts

- Advanced competitive intelligence tracking up to 25 competitors simultaneously with market share modeling

- Full multimodal support tracking text, image, and video brand appearances across all major AI platforms

Weaknesses:

- Enterprise-only pricing (custom quotes starting around $5,000/month, Fortune 500 deployments $15,000+/month)

- Requires dedicated 3-4 week onboarding with customer success team—not a self-service platform

- Overkill for brands not operating globally or in heavily regulated industries—most features unused by domestic mid-market companies

Best for: Global enterprises where brand reputation has material impact on stock price, regulatory standing, or M&A valuations. If you’re in pharmaceuticals, financial services, or B2B tech with international operations exceeding $500M revenue, the infrastructure-level monitoring and compliance documentation justifies the investment. Profound AI is what you deploy when your general counsel needs audit trails.

Nightwatch: Best Brand Visibility Monitoring Tool for Prompt-Level Agency Workflows

Strengths:

- Hourly refresh rate at an affordable price point ($199/month)—best refresh-to-cost ratio in the market

- Unlimited custom prompt creation allowing agencies to build specialized query libraries for each client vertical

- Customer journey stage mapping automatically categorizing mentions by funnel position (awareness, consideration, decision, retention)

- White-label reporting with custom branding for agency client delivery—generate professional PDFs without revealing the underlying tooling

Weaknesses:

- Sentiment analysis less sophisticated than Peec.ai or Siftly—basic positive/neutral/negative without shadow negative or competitive displacement detection

- No backlink-to-citation attribution mapping like Ahrefs provides

- Limited historical data on standard tier (only 90 days; 2-year history requires Enterprise at $499/month)

- No multimodal support

Best for: Marketing agencies managing 5-15 clients who need granular prompt tracking without enterprise pricing. The white-label reporting makes client communication seamless, and the unlimited prompt customization allows agencies to develop proprietary methodologies that differentiate their service offering. Perfect for agencies charging $5K-$15K/month retainers where AI visibility monitoring is a value-add service line.

Peec.ai: Best Tool for Sentiment-First Brand Reputation Monitoring

Strengths:

- Industry-leading sentiment categorization with 12 discrete sentiment types including competitive displacement, shadow negatives, and ambivalent mentions

- Emotion detection identifying when AI responses associate your brand with specific emotions (trust, frustration, excitement, confusion, reliability)

- Trend analysis showing sentiment trajectory over 6-month rolling windows with statistical significance testing

- Crisis monitoring mode with automated Slack alerts when negative sentiment spikes above 2 standard deviations from baseline

Weaknesses:

- Expensive ($599/month for Pro tier with full sentiment suite; Enterprise tier $1,499/month)

- Slower refresh rate (every 6 hours) compared to Nightwatch or Siftly’s real-time monitoring

- Limited competitive tracking—focuses primarily on your brand, not broader market context or Share of Model calculations

- No multimodal support

Best for: Reputation-sensitive brands (healthcare providers, financial institutions, consumer brands, professional services firms) where understanding how you’re discussed matters more than citation volume. Particularly valuable for brands navigating reputation recovery after crises or launching category-redefining products where sentiment precision is strategically critical.

Additional Specialized AI Search Monitoring Tools

BrandGuard.ai: Specializes in B2C e-commerce and D2C brands. Tracks product mentions across shopping-focused AI assistants including ChatGPT Shopping, Perplexity Shop, Amazon Rufus, and Google Shopping AI. Unique strength: Monitors if your products appear in AI-generated shopping lists and comparison tables. Pricing starts at $399/month. Best for consumer brands where AI shopping assistants are becoming primary discovery channels.

Luminous Insights: Academic-focused tracking for research institutions, think tanks, and thought leaders. Monitors AI citations of published papers, academic profiles, and research contributions. Tracks which papers drive citations in educational AI responses. Pricing: $149/month for individuals, custom enterprise pricing for institutions. Best for universities, research organizations, and consultancies where thought leadership monetization depends on academic credibility.

ResponseRadar: Developer-first tool with comprehensive Python SDK for building custom monitoring workflows. Ideal for technical teams who need programmatic access to raw AI response data for proprietary analysis. Open-source tier available for basic monitoring; premium features (historical data, API rate limits, priority support) at $299/month. Best for data science teams at tech companies who want to integrate AI monitoring into existing analytics infrastructure.

Monitoring Real-Time Social AI: Grok (X) and SearchGPT Considerations

In 2026, you cannot ignore Elon Musk’s Grok integrated into X (formerly Twitter) or OpenAI’s SearchGPT. These platforms represent a fundamentally different monitoring challenge than static LLMs like GPT-5 or Claude Opus 4 because they integrate real-time social signals and breaking news into their responses.

Key differences requiring specialized monitoring approaches:

- Velocity: Grok updates its knowledge base in near-real-time from X posts. A viral negative thread about your brand can poison Grok’s responses within hours, not weeks. Traditional daily refresh monitoring is inadequate—you need sub-hourly tracking.

- Source diversity: SearchGPT and Grok weight recent, high-engagement social content more heavily than archived knowledge sources. This means your Wikidata optimization matters less than your brand’s X engagement and news cycle presence.

- Sentiment volatility: Social-integrated AI platforms reflect sentiment swings faster than traditional LLMs. A brand can go from neutral-positive to shadow-negative citations within 72 hours if negative social sentiment reaches critical mass.

- Geographic variation: Grok and SearchGPT show pronounced regional differences because they incorporate local news and trending topics. Your brand might have strong citations in North American queries but weak visibility in APAC or EMEA searches.

Monitoring recommendation: Use Siftly or Profound AI for real-time Grok tracking with crisis alerting. Their infrastructure can handle the velocity requirements. For brands with significant social media presence or frequent news coverage, allocate 30-40% of your monitoring budget specifically to social-integrated AI platforms. The traditional knowledge-base-focused tools like Ahrefs Brand Radar will miss rapid sentiment shifts that originate in social channels.

Best Ways to Track Brand Mentions in AI Search Results: A Tactical Playbook

Selecting tools is step one. Using them strategically requires understanding implementation methodology. Here are the best ways to track brand mentions in AI search results through systematic, data-driven processes that deliver measurable ROI.

Step 1: Build Your Prompt Library Using the Query Hierarchy Framework

Most brands fail here by tracking vanity metrics. “How many times is our brand name mentioned?” is the wrong question. The strategic question: “For which specific customer problems does AI recommend us versus competitors?”

The Query Hierarchy Framework:

- Tier 1 – Problem Recognition (50 queries): Focus on pain point discovery. Example: “why do remote teams struggle with async communication?” These queries rarely mention brands but establish thought leadership associations.

- Tier 2 – Solution Exploration (75 queries): Category-level searches without brand names. Example: “best project management approaches for distributed teams.” High-value citations occur here.

- Tier 3 – Vendor Evaluation (100 queries): Direct tool comparisons and “alternatives to [Competitor]” searches. Monitor citation rate and sentiment positioning.

- Tier 4 – Purchase Decision (25 queries): Implementation, pricing, and integration questions. Track whether AI provides accurate information or hallucinates.

Pro tip from 40+ client implementations: Query specificity correlates inversely with citation competition. Generic queries (“best marketing software”) generate citations for incumbents with massive link profiles. Hyper-specific queries (“best marketing attribution software for B2B SaaS companies selling to healthcare with 6-month sales cycles”) create opportunities for specialized players. Allocate 60% of your prompt library to Tier 2-3 specific queries where you can realistically win citations.
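One way to keep a prompt library aligned with the Query Hierarchy Framework is to store each prompt with its tier and check how far each tier is from its target size. The sketch below assumes the tier sizes listed above (50, 75, 100, 25); the `Prompt` class and `coverage_gaps` function are hypothetical names, not part of any tool.

```python
from collections import Counter
from dataclasses import dataclass

# Target number of queries per tier, taken from the framework above.
TIER_TARGETS = {1: 50, 2: 75, 3: 100, 4: 25}


@dataclass(frozen=True)
class Prompt:
    tier: int
    text: str


def coverage_gaps(library: list[Prompt]) -> dict[int, int]:
    """Number of prompts each tier still needs to reach its target size."""
    counts = Counter(p.tier for p in library)
    unknown = set(counts) - set(TIER_TARGETS)
    if unknown:
        raise ValueError(f"unknown tiers: {sorted(unknown)}")
    return {tier: max(0, target - counts[tier])
            for tier, target in TIER_TARGETS.items()}


library = [
    Prompt(1, "why do remote teams struggle with async communication?"),
    Prompt(2, "best project management approaches for distributed teams"),
]
print(coverage_gaps(library))
```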

Step 2: Implement Persona-Based Testing for Market Segmentation Insights

AI search results vary dramatically based on who’s asking. A CEO searching for “enterprise CRM” sees different citations than a developer searching the identical term. The best brand visibility monitoring tools for AI search support sophisticated persona-based testing that reveals market positioning gaps.

Implementation methodology:

- Define 3-5 core buyer personas with demographic specificity (role, industry vertical, company size, technical sophistication level, budget authority)

- Modify query context to simulate each persona. Example: “I’m a CTO at a 200-person B2B SaaS company. What are the best…” vs. “I’m a marketing manager at an agency. What are the best…”

- Track persona-specific citation rates, sentiment, and competitive displacement patterns

- Identify which personas generate strong citations versus which generate competitive displacement—this reveals positioning strategy gaps
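The persona framing in the second step above is simple string templating, so a test matrix is easy to generate. This is a minimal sketch: the `Persona` fields and the example question are assumptions, and the template only handles roles that take the article "a".

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Persona:
    role: str     # e.g. "CTO"
    company: str  # e.g. "a 200-person B2B SaaS company"


def persona_prompts(personas: list[Persona],
                    questions: list[str]) -> list[tuple[str, str]]:
    """Return (role, prompt) pairs: every question framed by every persona."""
    return [
        (p.role, f"I'm a {p.role} at {p.company}. {q}")
        for p, q in product(personas, questions)
    ]


personas = [
    Persona("CTO", "a 200-person B2B SaaS company"),
    Persona("marketing manager", "an agency"),
]
questions = ["What are the best CRM tools?"]  # hypothetical base query

for role, prompt in persona_prompts(personas, questions):
    print(f"[{role}] {prompt}")
```

Keeping the role alongside each prompt makes it straightforward to group citation rates by persona once the responses come back.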

Real B2B example: A cybersecurity vendor discovered they were cited heavily for “CISO”-framed queries (82% citation rate) but almost never for “developer security” queries in the same product category (11% citation rate). Investigation showed their content library used executive language exclusively, not technical documentation. Three months of developer-focused content creation—GitHub repos, technical integration guides, API documentation—shifted their persona distribution from 80/20 (CISO/Developer) to 60/40, opening an entirely new buyer segment.

Step 3: Monitor Knowledge Cutoff vs. Real-Time Retrieval Attribution

This is technically sophisticated but strategically essential. AI models have two knowledge sources: (1) pre-trained knowledge from their training data cutoff, and (2) real-time retrieval from web search or social platforms. Understanding which source generates your citations reveals which optimization paths will deliver ROI.

Diagnostic framework:

- Use tools like Siftly that flag “knowledge cutoff citations” (from training data) vs. “real-time retrieval citations” (from current web search)

- If you’re cited primarily from knowledge cutoff (>70% of citations): Your brand has strong presence in training data sources. Optimization priority = maintaining and updating authoritative profiles on Wikipedia, Wikidata, and Crunchbase, along with your Schema.org structured data. These sources lock in your visibility for 12-18 months, until the next training cycle.

- If you’re cited primarily from real-time search (>70% of citations): You’re winning on fresh content SEO and news coverage. Optimization priority = consistent publishing cadence, journalist relationships, press release distribution, and ensuring content is crawlable with proper meta tags.

- If you’re not cited from either source (<30% total citation rate): You have both a training data gap AND a real-time content gap. Priority 1 = Wikipedia presence and Wikidata profile. Priority 2 = PR campaign for tier-1 publication mentions. This is a 6-9 month rebuild project.
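The diagnostic framework above reduces to a small decision function. The sketch below uses the 70% and 30% thresholds from the list. Checking the low total citation rate first, and the "mixed" fallback for everything in between, are assumptions, since the framework does not say how to order the rules.

```python
def diagnose(total_citation_rate: float, cutoff_share: float) -> str:
    """Map citation metrics to an optimization priority.

    total_citation_rate: fraction of tracked queries that cite the brand.
    cutoff_share: fraction of those citations attributed to training data;
                  the remainder is treated as real-time retrieval.
    """
    if not (0 <= total_citation_rate <= 1 and 0 <= cutoff_share <= 1):
        raise ValueError("rates must be fractions between 0 and 1")
    realtime_share = 1 - cutoff_share
    if total_citation_rate < 0.30:
        return "rebuild: Wikipedia/Wikidata presence first, then tier-1 PR"
    if cutoff_share > 0.70:
        return "training-data led: maintain authoritative profiles"
    if realtime_share > 0.70:
        return "retrieval led: keep publishing cadence and crawlability"
    return "mixed: balance profile maintenance and fresh content"


print(diagnose(0.45, 0.80))
print(diagnose(0.45, 0.20))
print(diagnose(0.10, 0.90))
```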

Platform-specific insight: GPT-5 and Gemini 2.0 rely more heavily on knowledge cutoff (65-70% of citations) than Perplexity, Claude, or SearchGPT, which prioritize real-time retrieval (60-75% of citations). Grok is 90%+ real-time given its X integration. Diversify your monitoring across platforms to avoid optimization blind spots where you’re invisible to entire model families.

Technical Audit: Best Tools for Tracking Brand Mentions in AI Search

Beyond monitoring dashboards, technical teams should leverage the specialized audit capabilities that separate the best tools for tracking brand mentions in AI search from basic sentiment trackers. These features provide actionable optimization roadmaps, not just measurement.

Critical technical audit dimensions:

- Schema.org markup validation: Tools like Siftly and Profound AI audit whether your structured data is being parsed correctly by AI systems. They identify malformed JSON-LD, missing required properties, and inconsistent schema types that cause AI hallucinations about your offerings.

- JSON-LD verification: Ensures your knowledge graph data is formatted correctly for AI consumption. Common errors: incorrect @context declarations, missing @id properties, broken entity relationships. These technical errors explain 40-60% of citation accuracy problems.

- Wikidata completeness scoring: Profound AI provides specific recommendations for improving your Wikidata profile with missing properties, citation sources, and relationship mappings. Wikidata optimization typically increases citation rates 2-4x.

- API documentation indexing: For B2B tech companies, specialized tools track whether AI models are citing your technical documentation correctly or hallucinating API endpoints, parameter requirements, and integration steps.

- Citation source diversity index: Measures how many unique domains contribute to your AI citations. Higher diversity (30+ unique sources) = more resilient visibility. Low diversity (<10 sources) = vulnerable to single-source changes poisoning your entire citation profile.

- Semantic consistency scoring: Analyzes whether your brand positioning is consistent across all citation sources. Inconsistent messaging (you describe yourself as “enterprise-grade” on your site but press coverage frames you as “SMB-focused”) creates confused AI responses that hurt conversion rates.
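Some of the JSON-LD errors named in the list above (malformed JSON, a missing `@context`, a missing `@id`) can be caught with a basic sanity check before reaching for a commercial audit. The sketch below is not a full JSON-LD or Schema.org validator: it only handles a single top-level object with a string `@context`, and the function name and sample documents are hypothetical.

```python
import json


def jsonld_problems(raw: str) -> list[str]:
    """Return a list of basic problems found in a JSON-LD snippet."""
    try:
        doc = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"malformed JSON: {exc.msg}"]
    if not isinstance(doc, dict):
        return ["top-level value is not a JSON object"]

    problems = []
    context = doc.get("@context")
    # Simplification: real JSON-LD also allows array and object contexts.
    if not (isinstance(context, str) and "schema.org" in context):
        problems.append("@context is missing or does not point at schema.org")
    for keyword in ("@type", "@id"):
        if not doc.get(keyword):
            problems.append(f"{keyword} is missing")
    return problems


good = ('{"@context": "https://schema.org", "@type": "Organization", '
        '"@id": "https://example.com/#org", "name": "Example Co"}')
bad = '{"@context": "https://schema.org", "name": "Example Co"}'

print(jsonld_problems(good))
print(jsonld_problems(bad))
```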

Implementation note: Run technical audits quarterly using Siftly (for Schema.org and JSON-LD validation) and Profound AI (for Wikidata optimization). These audits identify the “low-hanging fruit” optimization opportunities that can increase citation rates 20-40% with 2-3 weeks of technical work—substantially higher ROI than months of content creation.
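The citation source diversity index from the audit list is also easy to approximate yourself if your tool exports the URLs behind each citation. The sketch below counts unique domains and applies the thresholds mentioned earlier (30 or more sources, fewer than 10). The "moderate" label for the range in between is an assumption, as are the function names.

```python
from urllib.parse import urlparse


def unique_domains(urls: list[str]) -> set[str]:
    """Collect distinct hostnames, treating 'www.' variants as the same site."""
    domains = set()
    for url in urls:
        host = urlparse(url).hostname  # None when the URL has no scheme/host
        if host:
            domains.add(host.removeprefix("www."))
    return domains


def diversity_label(source_count: int) -> str:
    """Classify resilience using the thresholds from the audit list."""
    if source_count >= 30:
        return "resilient"
    if source_count < 10:
        return "vulnerable"
    return "moderate"  # assumed label for the 10-29 range


cited = [
    "https://www.example.com/pricing",
    "https://example.com/docs",
    "https://github.com/example/sdk",
]
domains = unique_domains(cited)
print(len(domains), diversity_label(len(domains)))
```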

The ROI of AI Search Monitoring: Defending Brand Equity with Quantifiable Metrics

CFOs challenge AI search monitoring budgets with one question: “What’s the financial impact?” Here’s how to quantify the ROI using frameworks that translate citation metrics into revenue protection and competitive advantage.

Formula 1: Competitive Displacement Value

Every query where a competitor is cited instead of you represents transferred market awareness. Here’s the formula for calculating displacement cost:

Lost Opportunity Value = (Competitor Citations – Your Citations) × Query Volume × Brand Awareness Value × Conversion Probability

Or in mathematical notation:

LOV = (ΔCitations) × Vol × BAV × CP

Where:

- ΔCitations = citation rate difference (competitor’s 67% vs. your 12% = 55 percentage points)

- Vol = estimated monthly query volume for your category (use search volume as proxy: 50,000 searches/month)

- BAV = brand awareness value per impression ($2-$8 depending on industry; use $4 for B2B SaaS)

- CP = conversion probability from awareness to consideration (typically 8-15% for B2B)

Real calculation: A healthcare client discovered that a competitor was cited in 67% of responses, versus their own 12%, for “urgent care solutions” queries (50,000/month volume). With BAV = $5 and CP = 0.12, LOV = 0.55 × 50,000 × $5 × 0.12 = $16,500/month in lost brand value ($198,000 annually). After 6 months of optimization (Wikipedia, Schema.org, press coverage), their citation rate reached 51%, narrowing the gap from 55 to 16 percentage points (a 71% reduction in competitive displacement) and generating an estimated $140,000 in recovered brand value.
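For teams that want to rerun this calculation with their own inputs, the LOV formula translates directly into a small function. The function and parameter names are hypothetical; the inputs below are the healthcare figures from the example.

```python
def lost_opportunity_value(competitor_rate: float, own_rate: float,
                           monthly_volume: int, bav: float, cp: float) -> float:
    """LOV = (competitor rate - own rate) x Vol x BAV x CP, per month."""
    delta = competitor_rate - own_rate
    return delta * monthly_volume * bav * cp


monthly_lov = lost_opportunity_value(
    competitor_rate=0.67, own_rate=0.12,
    monthly_volume=50_000, bav=5.0, cp=0.12,
)
print(f"Monthly LOV: ${monthly_lov:,.0f}")
print(f"Annual LOV:  ${monthly_lov * 12:,.0f}")
```

Because BAV and CP are estimates, it is worth running the function across the low and high ends of each range to see how wide the resulting band is.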

Formula 2: Hallucination Cost Calculation

When AI models hallucinate false information about your brand, the damage compounds over time. Calculate hallucination cost:

Hallucination Cost = Detection Lag (days) × Daily Query Volume × Misinformation Impact Factor × Customer Lifetime Value Loss

HC = DL × DQV × MIF × ΔCLV

Real B2B SaaS case: AI models stated that a client “lacked enterprise security certifications” when it had recently achieved SOC 2 Type II compliance. The hallucination persisted for 42 days before detection. DL = 42 days, DQV = 380 queries/day, MIF = 0.31 (in follow-up surveys, 31% of prospects said they abandoned after seeing the misinformation), ΔCLV = $8,200 average deal value. HC = 42 × 380 × 0.31 × $8,200 ≈ $40.6M in total exposure. That figure is a theoretical ceiling, because it treats every query as a potential deal; the actual tracked lost pipeline was $340K over the 6-week period. Monitoring tools with hallucination detection would have caught this in 6-12 hours instead of 6 weeks.
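The hallucination cost formula can be sketched the same way. The names are hypothetical and the inputs are the figures from the SaaS case. The second call assumes a 12-hour detection lag (0.5 days) to show how strongly the result depends on DL.

```python
def hallucination_cost(detection_lag_days: float, daily_query_volume: float,
                       misinformation_impact: float, clv_loss: float) -> float:
    """HC = DL x DQV x MIF x delta-CLV (an exposure figure, not realized loss)."""
    return (detection_lag_days * daily_query_volume
            * misinformation_impact * clv_loss)


exposure_42_days = hallucination_cost(42, 380, 0.31, 8_200)
exposure_12_hours = hallucination_cost(0.5, 380, 0.31, 8_200)  # assumed lag

print(f"42-day exposure:  ${exposure_42_days:,.0f}")
print(f"12-hour exposure: ${exposure_12_hours:,.0f}")
```

Since every other term is fixed in the short run, detection lag is the only lever monitoring can pull, and exposure scales linearly with it.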

Formula 3: Training Data Moat Value

This is the strategic argument that wins executive buy-in: What AI models learn during training becomes their default knowledge for 12-18 months. Calculate the long-term value of establishing training data presence now:

Training Data Moat Value = (Projected Market Growth × Share of Model Advantage) × Lock-in Duration × Competitive Displacement Factor

TDMV = (PMG × SoMA) × LD × CDF

Strategic insight: GPT-6 training begins Q3 2027. Claude Opus 5 begins training Q2 2026. Gemini 3.0 training starts Q4 2026. The content and citations you build in 2026 determine your Share of Model in the next generation of AI systems for 18+ months. Missing this window means playing catch-up throughout 2027-2028. Worked example: for a company in a $2B addressable market growing at 40% annually (PMG = $2B × 1.4), a 5-percentage-point Share of Model advantage (SoMA = 0.05, being cited 35% of the time vs. a competitor’s 30%), a lock-in duration of 18 months (LD = 1.5 years), and a competitive displacement factor of CDF = 1.8 give TDMV = ($2B × 1.4 × 0.05) × 1.5 × 1.8 = $378M in defended market position.
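The TDMV example above can be checked with a short calculation. The sketch below is illustrative only: the function and parameter names are hypothetical, and it assumes projected market growth means one year of growth applied to the current addressable market, which is how the $2B × 1.4 term in the example reads.

```python
def training_data_moat_value(market_size, annual_growth, som_advantage,
                             lock_in_years, displacement_factor):
    """TDMV = (PMG x SoMA) x LD x CDF, as defined in the text.

    Assumption: PMG is the market size after one year of growth,
    i.e. market_size * (1 + annual_growth).
    """
    projected_market = market_size * (1 + annual_growth)
    return projected_market * som_advantage * lock_in_years * displacement_factor


# Inputs from the example: $2B market, 40% growth, 5-point SoM advantage,
# 1.5-year lock-in, and a competitive displacement factor of 1.8.
tdmv = training_data_moat_value(
    market_size=2_000_000_000,
    annual_growth=0.40,
    som_advantage=0.05,
    lock_in_years=1.5,
    displacement_factor=1.8,
)

print(f"TDMV: ${tdmv / 1e6:,.0f}M")
```

The result is highly sensitive to the displacement factor, so it is worth running the function with a range of values (for example 1.0 to 2.0) before quoting a single number.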

Early Warning ROI: Sentiment Degradation Detection

Traditional PR monitoring tells you about reputation crises after they’ve exploded. AI sentiment monitoring shows you trends weeks earlier. When multiple sources begin associating your brand with negative keywords, AI systems synthesize this into responses before traditional media coverage creates public awareness.

Financial services firm case study: A client detected a 15% increase in negative sentiment mentions (association with “complex fees” and “hidden charges”) three weeks before any major publication covered customer complaints. The early detection, possible only through hourly AI monitoring, allowed the firm to launch a proactive transparency campaign, update fee disclosure language on its site and in AI-crawlable structured data, and brief its customer success team on objection handling. Post-crisis analysis compared the response to that of three competitors who faced similar issues but reacted only after media coverage. The client’s approach reduced negative media coverage volume by approximately 40%, limited stock price impact to 3.2% (vs. 8-11% for reactive competitors), and prevented an estimated $2.4M in customer churn of the kind competitors experienced.
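A simple way to operationalize this kind of early warning is to compare a recent window of negative mentions against a trailing baseline. The sketch below is one possible approach, not how any specific tool works: the function name, the window lengths, and the 15% threshold (borrowed from the case study) are all assumptions, and the input is assumed to be a list of daily negative-mention counts exported from whatever monitoring tool you use.

```python
from statistics import mean


def negative_sentiment_alert(daily_negative_counts, baseline_days=14,
                             recent_days=7, threshold=0.15):
    """Flag a relative rise in negative mentions versus a trailing baseline.

    Returns (alert, relative_change). All window sizes and the threshold
    are illustrative defaults, not values prescribed by any vendor.
    """
    needed = baseline_days + recent_days
    if len(daily_negative_counts) < needed:
        raise ValueError(f"need at least {needed} days of data")

    window = daily_negative_counts[-needed:]
    baseline = mean(window[:baseline_days])
    recent = mean(window[baseline_days:])

    if baseline == 0:
        # No negative mentions in the baseline: any recent mention is new.
        return recent > 0, float("inf") if recent > 0 else 0.0

    change = (recent - baseline) / baseline
    return change >= threshold, change


# Hypothetical data: 14 stable days followed by a week of elevated mentions.
counts = [20] * 14 + [24] * 7
alert, change = negative_sentiment_alert(counts)
print(f"alert={alert}, change={change:.0%}")
```

In practice you would likely run this per platform and per keyword cluster (for example, fee-related terms) so that a localized shift is not averaged away.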

Future-Proofing Your AI Search Monitoring Strategy

The AI search landscape evolves faster than any previous search paradigm. GPT-5 changes citation behavior from GPT-4. Gemini 2.0 prioritizes different source types than Gemini 1.5. Perplexity’s real-time search integration creates different optimization requirements than ChatGPT’s knowledge cutoff model. Grok’s social integration fundamentally differs from all traditional LLMs.

Your monitoring strategy must be platform-agnostic and model-generation-independent. The brands that survive AI search disruption are those that focus on fundamentals persisting across AI systems:

- Authoritative source presence: Maintain rigorously updated profiles on Wikipedia, Wikidata, Crunchbase, and industry-specific knowledge bases. These sources feed training data for future model generations.

- Comprehensive structured data implementation: Full Schema.org markup, JSON-LD on all pages, knowledge graph data connecting your brand to industry concepts, products, and thought leadership.

- Citation-worthy original content: Publish proprietary research, original data studies, and industry insights that become reference material. AI models cite authoritative sources, not regurgitated content.

- Semantic consistency across all touchpoints: Ensure your brand positioning, value proposition, and factual information are identical across all potential citation sources. Inconsistent messaging creates confused, low-confidence AI responses.

- Continuous monitoring infrastructure: Citation performance requires ongoing measurement, not one-time optimization. Budget for permanent monitoring—this is not a project, it’s an operational capability.

Platform migration readiness: When GPT-6 launches or a new AI search platform gains 10M+ users, you need the infrastructure to add it to your monitoring within 48 hours, not 4 months. Choose tools with flexible API architectures (Profound AI, Siftly) or multi-platform coverage (Semrush One) rather than single-platform specialists.
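As a concrete illustration of the structured data and semantic consistency points in the list above, the sketch below generates a Schema.org Organization block in JSON-LD. The company name, description, and every URL are placeholders, and the helper function is hypothetical; the property names (`@context`, `@type`, `name`, `url`, `description`, `sameAs`) are standard Schema.org vocabulary.

```python
import json


def organization_jsonld(name, url, description, same_as):
    """Build a Schema.org Organization object serialized as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        # sameAs links the entity to its profiles on other reference sites.
        "sameAs": list(same_as),
    }
    return json.dumps(data, indent=2)


# Placeholder values for illustration only.
markup = organization_jsonld(
    name="Example Corp",
    url="https://www.example.com",
    description="Example Corp provides urgent care scheduling software.",
    same_as=[
        "https://en.wikipedia.org/wiki/Example_Corp",
        "https://www.wikidata.org/wiki/Q0000000",
        "https://www.crunchbase.com/organization/example-corp",
    ],
)

# Wrap the JSON-LD in the script tag used to embed it in a page.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Generating the markup from a single source of truth, rather than hand-editing each page, is one way to keep the description and profile links identical everywhere they appear.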

Conclusion: Share of Model Is the New Market Share

The best ways to track brand mentions in AI search aren’t about vanity metrics or tool proliferation. They’re about defending market position in a world where AI systems increasingly mediate the relationship between customers and brands. Traditional SEO measured visibility on result pages. AI search demands measuring representation in synthesized answers—a fundamentally different challenge.

We’ve covered the methodology (the best AI search performance monitoring tools track citation frequency, sentiment context, prompt-level performance, source attribution, and shadow citations), reviewed the market leaders (Siftly for citation probability, Semrush One for SEO integration, Ahrefs for backlink attribution, Profound AI for enterprise scale, Nightwatch for prompt-level tracking, Peec.ai for sentiment sophistication), provided tactical implementation frameworks, and quantified the financial ROI through competitive displacement formulas, hallucination cost calculations, and training data moat valuations.

The best brand visibility monitoring tools for AI search provide the infrastructure for this new reality. Select tools based on your specific needs: enterprise global scale (Profound AI), agency workflows with white-label reporting (Nightwatch), backlink ROI justification (Ahrefs Brand Radar), existing SEO stack integration (Semrush One), or maximum citation accuracy with hallucination detection (Siftly). Whatever you choose, start monitoring immediately.

Critical strategic insight: The brands that will dominate AI search in 2027-2028 are monitoring, optimizing, and defending their Share of Model today. Every week you delay is a week your competitors build citation moats in training data that will persist across the next generation of AI models. When GPT-6, Claude Opus 5, and Gemini 3.0 complete training, your competitive position will be locked for 18 months. The window for influence is now.

The zero-click crisis isn’t coming—it’s here. In 2026, more than 60% of product discovery searches never result in a website click. Users get their answers, make their decisions, and move forward without ever visiting your carefully optimized landing pages. Your brand’s survival depends not on ranking #1 in Google, but on being cited accurately, positively, and frequently when AI systems synthesize answers.

The question isn’t whether to monitor your brand in AI search. The question is whether you’ll be visible, accurately represented, and positioned favorably when your customers ask. Will your competitors control the narrative AI systems tell about your industry? Or will you?

Start monitoring today. Optimize your training data presence this quarter. Defend your Share of Model before the next generation of AI systems locks in competitive advantage for the next 18 months. The future of brand visibility is being written in training data right now—make sure your brand is part of that story.
